You may find the following useful if you are developing a microservices based solution, and you have direct responsibility for multiple microservices. You want to avoid tight coupling between the microservices you’re directly responsible for, but you want to take advantage of easy communication between teams.

We got interested in this problem because we were losing time thinking about how to set up our individual integration tests. We wanted to make the decisions easier by defining the approach at a more fundamental level.

The solution concepts were

Using git flow branching terminology

Each service should be small

The develop branch of each service should remain ready to merge to master

Therefore the develop branch of each service should test itself against the master branch of all other relevant services, both closely related and others

Where there are closely related services, it *may* be useful to have functionality on the develop branch of one service alongside the develop branch of another closely related service, before they are both merged to master

There is a cost of providing this capability because it introduces extra test scenarios (see below) and the benefits are only material if there is a reason to withhold code from master

Withholding code from master allows a closely related set of services be tested before it goes to master. So if there ARE actually a closely related set of services AND the cost of testing on master is non trivial (e.g. if this triggers compliance tests and stability there is more important) then the benefits of the extra develop stage can be significant.

Therefore the develop branch of each service should test itself versus the develop branch of the other more closely related services

Defining which services layer on top of which other services can be used to define the sequence of promotion of services, reducing the challenges of providing backwards/forwards compatibility

The above services layering definition provides a standard for which pipelines should own which integration tests, avoiding both duplication of tests and monolithic test pipelines

Using the feature/develop/master branching pattern, the services layering concept and the integration test patterns specified here, it it not necessary to use a configuration file to specify particular versions of services for integration testing. Instead we can use the head of each of the relevant branches.

The solution in practice …

We would sometimes have two services with compatible changes on the develop branch that could not be merged to master independently. Coordinating the simultaneous merge to master is actually not trivial because we could merge the code on the two branches within a few seconds of each other but testing master branch service A against master branch service B is done in pipeline A using the image of service B created by pipeline B. If they both got merged to master simultaneously then if pipeline A just tries to provision the latest image of service B, then there is no guarantee that pipeline B has completed and created a new image. If pipeline B also in turn depended on pipeline A for this change, then potentially it could not have passed.

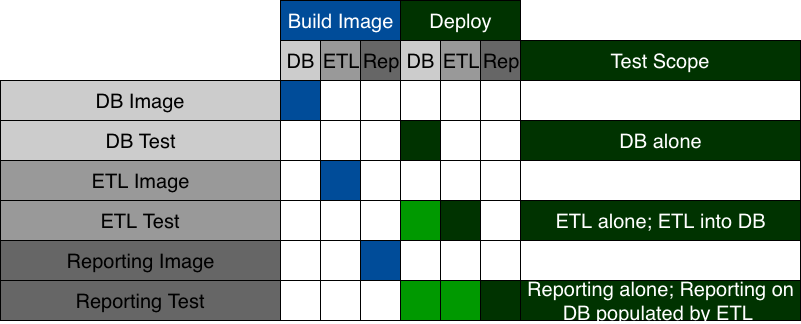

The above challenge is resolved by understanding when services aren’t really independent, they’re layered. In the following example, a database is the most fundamental. An ingest process that writes to the database is layered on that. A reporting process that reads what was written to the database is layered on that in turn. This has a key impact on deployments: the more fundamental services need to be deployed before the services that depend on them. So we deploy the database to create a new column, we deploy the ingest process to make it capable of populating the new column, then we deploy the reporting process to report on the contents of the new column as populated by the ingest process into the database. We don’t try to make it possible to deploy the updated ingest process before the updated database, or the updated reporting before the updated ingest process.

We also avoid trying to synchronise the merging and the deployments of the layers - that is challenging because of how the system is distributed especially in a high availability context. If we can’t solve the compatibility issue then we have a breaking change.

Likewise this layering informs where the tests go. The database has tests which confirm that all tables and views are built correctly and the SQL doesn’t contain syntax errors. It doesn’t need to call any other services. The ingest process needs to provision a database for its integration tests and it checks that it can ingest correctly into the latest image of that branch of the database. Then the reporting process needs to provision an instance of the ingest process and the database and check that its reports work, again using the latest images of the relevant branches of the database. This concept may help resolve apparent needs to duplicate tests between pipelines.

If it still isn’t clear where the tests go, maybe the definitions for which service is which need some attention.

We’ve used a simple rule in the past where integration testers just need to know what’s on develop and whether the develop tests pass. This causes problems though when code is merged to develop and then tested, because the feature can then be identified as incorrect, and may then need to be rolled back. The effect of this is intended to be limited by demonstrating the regression tests on the feature branch before merging, but without a robust way to set up integration regression tests on feature branches, the tests tend not to be thorough enough.

It’s therefore necessary to test the feature branch of a service before merging to develop. It should be tested against the develop branch of related services and the master branch of other services.

Service versus closely related services:

Service versus other, not closely related services:

The test setup patterns can be summarised like this:

FDM - Feature Branch Testing

Triggered by pushing new code on the feature branch or manually when doing exploratory testing. This allows the developer to confirm the service works correctly before passing to the testers and allows the testers to test thoroughly including adding more automated integration tests before approving the pull request to the develop branch.

FFM - Feature Branch Testing - Breaking Changes & Spike Solutions

If the new feature requires changes to other closely related services then the developer could make both of those simultaneously on feature branches to confirm the approach works before pushing any changes to the develop branch. This avoids making changes to the more fundamental services that then need to be rolled back. If the combination has to be delivered as a breaking change then this is also how it will need to be tested. See the section below on breaking changes.

DDM - Service Team Risk Management

This is the main set of tests used by the service team for more in depth testing. By using the develop branch for all services owned by that team, it reduces the number of environments and test runs needed by that team for thorough testing before merging to master. This is triggered periodically, even if there haven’t been any code changes in the service or closely related services. This periodicity sets a limit on the time that can elapse before detecting the impact of changes in the other services that are outside the team’s control. It’s not usually practical to trigger the DDM tests on every change in the whole environment, partly because that would result in so many iterations of tests, most of which wouldn’t find anything, partly because this run of tests is the most resource intensive which makes that problem worse, and partly because it’s not always clear what dependencies there are. Trying to figure them out is not going to be reliable enough, so it’s best to run this kind of test at least daily. It can be triggered from a subset of specific changes as well if those are considered high risk.

DMM - Pre Merge Dependency Checks

Because the service team concentrates on the DDM tests, there is a risk that dependency problems will be caused by merging develop to master for a specific service. In the case of a breaking change, this test will fail until the related services are also merged to master. The service team assigns a service version number at this point.

MMM - Production Deployment Risk Management

Before deploying to production, the complete set of master branch services are tested together. If all tests pass, then that set of versions of those master branch services has been confirmed to work correctly together and is approved for deployment to production.

Other Considerations and References…

If the layering pattern means that the database will be deployed separately from other services, it’s likely to be difficult to test the services which rely on the database with that database config before putting that database config into production, unless the DDM pattern is used. But it’s best to optimise for getting correct changes into production as fast as possible, and if the DDM pattern is adding no value above the DMM pattern, then it should not be used.

We do not trigger all pipelines automatically to retest whenever we change a service that other pipelines depend on. Each service has the responsibility to validate its own contracts, and its own tests are meant to cover that. But because that testing may not be perfect, we still need to rerun the other pipelines. Ideally we would trigger all dependent pipeline tests whenever we make a change, but that uses a lot of resources when the risk is relatively low. So it can be sufficient to run a periodic set of tests, for example nightly. So for example if the database has changed but the ingest process has not been changed, the nightly integration tests will test that combination. Note that this set of integration tests does not need to rebuild the code for example rebuilding docker images, because the code has not changed. It just needs to provision what has already been built. Eliminating redundant builds can save significant time. This makes a case for making it convenient to deploy and run tests independently from creating images.

We don’t need to specify versions when setting up tests in order to converge on a stable solution if we are merging in the right order. Even if we have some kind of breaking change that requires two services with features simultaneously merged to develop in order for develop to pass tests, then we can merge each to develop at about the same time and take the risk that either no tests are run between the two merges (a fair assumption if the DDM tests are nightly, but may invalid if they are triggered of every push) or just accept that we’ll see a failure then a pass on the DDM tests. This is fine because the cost of a develop branch failure is relatively low. We don’t risk production deployments based on git branch merge timings though, and the protection against this is having the MMM tests tag the complete set of services as valid to be deployed together. If we had multiple environments that needed that level of protection, rather than just production, then the versions approved for this could be tracked in a repository, such as with https://opencredo.com/versioning-a-microservice-system-with-git/. With databases because deployments are less convenient to roll back, it could be useful to do this to protect large staging environments from getting broken. We’re avoiding this when we can and using the head of each branch for simplicity.

Higher level summaries of git flow can describe the develop branch as some kind of mixing pot for the developers:

We would suggest some more specific standards:

Regarding the layering concept with the database as the most fundamental service, in the context of a business intelligence system, it’s appropriate that the data modeller who owns the database structure is the leader, initiating deployment of changes to the database that give it the capability to deal with the data that the services will need. The services that need to use the database go second and THEY have the tests that check they work against the database. The database should have enough tests to know it meets its contracts (DbFit is a convenient tool for this), then the secondary services can CHOOSE to trigger against changes to the database. Note that if the secondary services become broken and need the trigger, they are getting early warning that there will be a breaking change. The breaking change is unintentional if found this way - intentional ones would have been identified by the data modeller earlier.

As noted above, it is better to do regression testing on feature branches to avoid breaking develop and having to wind back - faster regression testing is particularly valuable when we are doing more of it. However, some new tests can be done on develop to avoid slowing down the merge of the feature branch to develop. The guiding principle is to keep develop ready to merge to master.