You may find the following useful if you need to describe a team structure in terms of roles, possibly because the team needs to change.

Redshift Serverless

You may find the following useful if you want to understand the differences between provisioned Redshift clusters (“Provisioned Redshift”) and Redshift Serverless (“Serverless”).

We got interested in this problem because we wanted to understand when Serverless may be a better fit than Provisioned Redshift in terms of running costs, performance, reliability/availability, management and security.

Key Differences

Both flavours of Redshift use the same query engine, so any SQL should work and produce identical results in either setup.

The following table defines differences between the key components:

Which is better?

It should be noted first that there are no known plans for AWS to stop the provisioning of Redshift clusters, and nothing in the documentation states that Serverless will perform better or be cheaper than any existing setup: it will depend on the number and types of workloads.

Nor has AWS published anything stating how each level of RPUs (Redshift Processing Units) compares to cluster node types.

Guidelines

Generally though, the following guidelines can be used:

Provisioned Redshift may be cost effective if it is sized correctly and utilisation is high and constant

Redshift Serverless may be cost effective for “spiky” or less predictable workloads, or where fast scaling of resources is required (for example, a process has an SLA that can be put at risk when parallel workloads are in flight)

In terms of the first point, recall that Provisioned Redshift will charge when the cluster is running, regardless of whether it is in use, whereas Serverless only charges when queries are running.
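As a rough illustration of that trade-off, the sketch below compares the two charging models and works out how many busy hours Serverless can run before it costs as much as a cluster left running. All rates and sizes are made-up placeholders, not AWS prices, and it assumes the chosen RPU level handles the workload as well as the cluster does (which, as noted above, AWS does not publish a comparison for).

```python
def provisioned_cost(cluster_hourly_rate, hours_running):
    """Provisioned: charged for every hour the cluster is running, busy or idle."""
    return cluster_hourly_rate * hours_running


def serverless_cost(rpu_hour_rate, rpus, busy_hours):
    """Serverless: charged only for the hours in which queries are running."""
    return rpu_hour_rate * rpus * busy_hours


def break_even_busy_hours(cluster_hourly_rate, hours_running, rpu_hour_rate, rpus):
    """Busy hours at which Serverless costs the same as the running cluster."""
    return provisioned_cost(cluster_hourly_rate, hours_running) / (rpu_hour_rate * rpus)


# Hypothetical figures: a cluster at 4.0 per hour left running all day,
# versus a workgroup at 32 RPUs priced at 0.5 per RPU hour.
hours = break_even_busy_hours(4.0, 24, 0.5, 32)
print(f"Serverless is cheaper below {hours} busy hours per day")
```

With these placeholder numbers, Serverless only wins if queries are running for fewer than 6 hours a day, which is why high, constant utilisation tends to favour a correctly sized cluster.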

Predictable workloads

Hence, if your workload is a long-running batch process that starts and ends around the same time each day, one approach could be to have a process that starts the (existing) cluster, runs the batch and then pauses the cluster to prevent any further running costs (storage costs are separate).
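A minimal sketch of such a wrapper process is shown below. It assumes a boto3 Redshift client is passed in (`resume_cluster`, `pause_cluster` and the `cluster_available` waiter are boto3 calls); the function name, the `run_batch` callable and the cluster identifier are hypothetical, and a real process would also want timeouts and alerting.

```python
def run_batch_then_pause(client, cluster_id, run_batch):
    """Resume a paused cluster, run the batch, then pause the cluster again."""
    client.resume_cluster(ClusterIdentifier=cluster_id)
    # Block until the cluster reports that it is available for queries.
    client.get_waiter("cluster_available").wait(ClusterIdentifier=cluster_id)
    try:
        return run_batch()
    finally:
        # Pause even if the batch fails, so the cluster stops accruing running costs.
        client.pause_cluster(ClusterIdentifier=cluster_id)


# Usage (requires AWS credentials; "nightly-cluster" is a placeholder name):
#   import boto3
#   run_batch_then_pause(boto3.client("redshift"), "nightly-cluster", my_batch)
```

The `try`/`finally` is the important design choice here: a failed batch should not leave the cluster running overnight.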

The predictable nature of this workload could allow the cluster to be rightsized for better costs.

Ad hoc workloads

However, perhaps workloads are triggered by upstream processes on an ad hoc basis. They could run in parallel, demanding more resources than the default setup can cope with, or not run at all.

Provisioned Redshift has the option of Concurrency Scaling whereby, once all internal queues have queries running, queued queries are offloaded to additional capacity outside of the cluster. From experience, it can take time for the offloaded queries to begin to run.

Alternatively, more nodes could be requested (via elastic resizing) but again there is some delay before they are available. Another strategy could be to have separate clusters triggering different workloads.

Serverless option

In the above scenario, the extra demand from parallel workloads would be served by the built-in fast auto-scaling functionality. Or, if the workloads require different levels of compute capacity, they could be run in their own workgroups, each with the level of base RPUs that suits its performance/cost needs. This is similar to running separate Redshift clusters, but without the setup/management/startup overhead.

Hybrid

There is nothing to prevent some workloads being run on Serverless capacity and others on Provisioned Redshift.

Summary

Our own work with clients has shown that significant savings could be seen when using Serverless over Provisioned Redshift in two kinds of environment: development environments, where usage was sporadic and experimental in nature, and production environments where product pipelines ran in a fairly predictable manner, supplemented by small volumes of ad hoc usage (giving a pattern of high spikes in demand and plenty of idle time).

Highly utilised environments, where development, test and exploratory work is performed with real data and with little idle time during working hours, are better served by Provisioned Redshift, with the cluster started and paused at the start/end of the day. Arguably though, the exploratory work may not be a good fit in an environment where dev/test is also performed.

Gotchas

Minimum duration

Serverless charges for the compute (RPUs) used in a given period, but there is a minimum charge of 60 seconds.

Hence, 10 short duration queries in a minute will be charged as 60 seconds at the RPU rate in use.
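The arithmetic can be sketched as follows. This is a simplified model of the 60 second minimum only, and the RPU level and the price per RPU hour are placeholders rather than AWS rates.

```python
MINIMUM_BILLED_SECONDS = 60


def serverless_compute_charge(active_seconds, rpus, price_per_rpu_hour):
    """Charge for one stretch of activity, applying the 60 second minimum."""
    billed_seconds = max(active_seconds, MINIMUM_BILLED_SECONDS)
    return billed_seconds * rpus * price_per_rpu_hour / 3600


# Hypothetical workgroup: 32 RPUs at 0.5 per RPU hour.
short_burst = serverless_compute_charge(10, 32, 0.5)   # 10 seconds of activity
full_minute = serverless_compute_charge(60, 32, 0.5)   # 60 seconds of activity
print(short_burst == full_minute)  # True: both are billed as 60 seconds
```

In this model, a burst of activity that finishes in 10 seconds costs exactly the same as one that keeps the workgroup busy for the full minute.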

If there is a process that checks state very frequently (every 60 seconds or less), the Serverless capacity will be in constant use from a billing perspective, and could be more expensive than the same process running on a provisioned cluster.

Of course, Redshift is intended for running larger analytical queries, and processes such as maintaining/checking state may be better served outside of Redshift. Generally speaking, Redshift, due to its columnar nature and clustered architecture, does not give great performance when running lots of “small” queries (for example, using INSERT to load large volumes of data rather than the recommended COPY from S3).

Also note that, as Serverless charges based on the RPUs in use at a given point, parallel queries that together fit within the available RPUs (and so do not require scaling) will be charged as one. Serverless does not charge per query; it charges for the compute used at a point in time.

Minimum IPs

Serverless capacity must be launched in separate subnets across 3 Availability Zones. Each subnet will require the number of IP addresses required to support the level of RPUs requested in the workgroup (plus any additional IPs required if the base level scales up). For example, a base level of 32 RPUs will require 13 IPs whereas at the highest level of 512 RPUs, 133 are needed.
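A quick way to sanity check candidate subnets against those figures is sketched below. It uses only the two IP counts quoted above, relies on the fact that AWS reserves five addresses in every subnet, and assumes the subnets are otherwise empty; the CIDR ranges are placeholders.

```python
import ipaddress

# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_ips(cidr):
    """Addresses available for use in a subnet with nothing else deployed in it."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


def subnets_too_small(cidrs, required_ips):
    """Return the subnets that cannot supply the required number of IPs."""
    return [cidr for cidr in cidrs if usable_ips(cidr) < required_ips]


candidate_subnets = ["10.0.0.0/28", "10.0.1.0/27", "10.0.2.0/24"]  # placeholders
print(subnets_too_small(candidate_subnets, 13))    # base level of 32 RPUs
print(subnets_too_small(candidate_subnets, 133))   # highest level of 512 RPUs
```

In practice the free address count should be taken from the VPC itself, since other resources in the subnet will already be consuming IPs.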

Cost monitoring

Costs for running a cluster are fairly predictable as the running rate is known upfront. If a user accidentally kicks off an inefficient query or process that takes a long time to complete or be aborted, there will be no additional costs (other than any Concurrency Scaling that may occur due to other parallel workloads).

On Serverless, this could lead to surprises, so AWS have built in a number of cost monitoring controls:

System views make it possible to track usage and work out the charges for inflight and completed queries

Session timeouts ensure that open transactions or idle sessions are closed (note - any transaction opened via BEGIN that has no corresponding END will continue to incur charges!)

Query timeouts

Usage limits - a maximum number of RPU hours can be defined (for example, to set a daily maximum). Attempts to use more can be blocked or alerts can be sent.
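As an example of the last control, the sketch below builds the request for a daily usage limit. The parameter names and values follow our understanding of the boto3 `redshift-serverless` `create_usage_limit` call and should be checked against the current documentation; the helper name and the workgroup ARN are hypothetical.

```python
def daily_compute_limit(workgroup_arn, rpu_hours, block=False):
    """Build create_usage_limit arguments for a daily cap on RPU hours."""
    return {
        "resourceArn": workgroup_arn,
        "usageType": "serverless-compute",
        "amount": rpu_hours,
        "period": "daily",
        # "deactivate" stops further user queries; "emit-metric" publishes a
        # metric that an alert can be built on.
        "breachAction": "deactivate" if block else "emit-metric",
    }


request = daily_compute_limit("arn:aws:redshift-serverless:placeholder", 100, block=True)
print(request)

# Usage (requires AWS credentials):
#   import boto3
#   boto3.client("redshift-serverless").create_usage_limit(**request)
```

Starting with an alert-only limit and moving to a blocking one once normal usage is understood avoids cutting off a legitimate pipeline by surprise.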

Field names, column names, element names, attributes and keys

Business Architecture Diagrams

Architecture Diagram Type Choices

The Focus Schedule

Compound Compromises

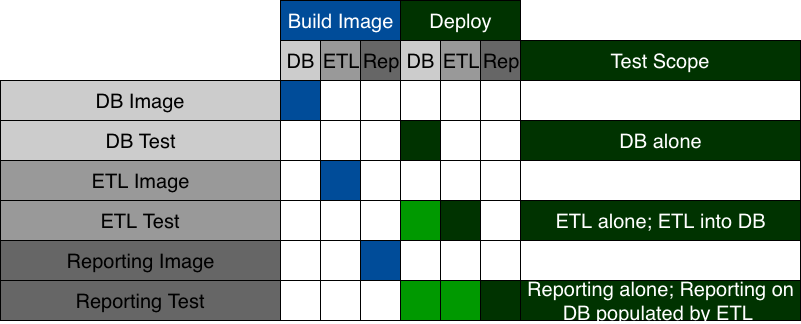

Test Framework Context

You may find the following useful if you are using a test framework such as Fitnesse where the developer/tester interacts with it using a web browser in order to create and run tests, and you want to make the framework available to developers without the inconvenience and inconsistency of local installs or creating a server specifically for it, and you also want to make it available to the CICD platform.

Simplified Service Access Principles

Benchmarking Query Experience Over Recurring Time Periods

Workload Volume

Query Experience Benchmarking

Structuring Data Warehouse Benchmarks

Lightweight Completeness Checks

Feature Epics

You may find the following useful if you can’t easily see which user stories relate to which functionality, so you can’t tell what the impact on delivery is of a problem with a user story, or you can’t easily get a view on when functionality is expected to be ready because you’re not sure what all the relevant user stories are.

Find Problems With Checklists, Solve Them With Services

Automated Integration Test Branch Patterns

You may find the following useful if you are developing a microservices based solution, and you have direct responsibility for multiple microservices. You want to avoid tight coupling between the microservices you’re directly responsible for, but you want to take advantage of easy communication between teams.

The Architect and The Rule of Law

You may find the following useful if you are an architect in an agile team defining your role, or if it is unclear if what the team delivers conforms to the organisation’s architectural principles, or if you are being slowed down by an overload of communication relating to official approvals for delivery.

Aggressimistic Planning

Effective Daily Scrum Meetings

You may find the following useful if you have challenges running effective daily scrum meetings with a larger geographically distributed team. You might be finding that it takes more than 10 minutes, is awkward, shares no new understanding and results in no one doing anything different. Some people may be complaining that you’re not Agile enough and you need a smaller co-located team, and others may have decided that Agile is ineffective.